I Analyzed 2,260 AI Use Cases. Here's What's Actually Happening.

What thousands of case studies reveal about how AI is actually being used today.

The Big Question

Everyone talks about AI hype. Every week there’s a new claim that AI will “revolutionize” some industry. But how much of this is real, and how much is marketing?

To find out, I went through more than 2,260 documented AI use cases from vendors, enterprises, startups, NGOs, and governments. I cleaned, normalized, and scored them, and in the process built what I call the AI Use Cases Library, an open, structured dataset that anyone can explore, filter, and learn from.

It’s now freely available on GitHub.

And along the way, I discovered not just patterns of how AI is used, but also the messy, ironic, and sometimes hilarious reality of gathering data at this scale.

Why I Built This

My motivation was simple: transparency and AI literacy.

Most conversations about AI are anecdotal, vendor-driven, or focused on hype. I wanted evidence. Vendors release case studies, but they’re scattered across websites, written as marketing collateral, and often missing crucial details.

So I decided to bring them together into one structured, open resource, not just to count AI projects, but to understand how organizations actually use AI:

Which industries lead adoption?

What problems do they tackle?

Which tools and models are used?

What benefits do they actually report?

This isn’t about promoting any vendor or technology. It’s about creating a neutral resource for researchers, practitioners, educators, and anyone who wants to separate signal from noise.

How I Built It

As of v1.0, the dataset combines use cases mainly from AWS, Microsoft, Google Cloud, OpenAI, Anthropic, IBM, and NVIDIA.

The process:

1. Collection: Researched, scraped and downloaded thousands of vendor case studies. Not everything made the cut: although I started with over 3,000 entries, 690 were excluded because they were not really AI (many were classic data/analytics projects or “AI-powered” marketing buzzwords without actual AI or machine learning).

2. Cleansing & Deduplication: Some sources vanished (404 errors, links removed) mid-way through. Some organizations were listed multiple times. Vendors sometimes reused identical stories across multiple sections.

3. Enrichment: Expanded short descriptions into clear Problem → Approach → Impact narratives. Normalized tools to include Vendor, Product, and Model whenever possible. Tagged each case by Industry, Subindustry, and Domain.
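The deduplication step above can be sketched roughly as follows. This is a minimal illustration, not the pipeline actually used for the library: the field names (`organization`, `title`, `vendor`), the sample records, and the choice to match on a normalized organization-plus-title key are all assumptions made for the example.

```python
import re
import unicodedata


def normalize(text: str) -> str:
    """Lowercase, strip accents and punctuation, collapse whitespace."""
    text = unicodedata.normalize("NFKD", text)
    text = "".join(ch for ch in text if not unicodedata.combining(ch))
    text = re.sub(r"[^a-z0-9]+", " ", text.lower())
    return text.strip()


def deduplicate(cases):
    """Keep the first case seen for each (organization, title) key."""
    seen = set()
    unique = []
    for case in cases:
        key = (normalize(case["organization"]), normalize(case["title"]))
        if key not in seen:
            seen.add(key)
            unique.append(case)
    return unique


# Hypothetical records: the first two describe the same story with
# slightly different spelling, as happens when a vendor reuses a case.
sample_cases = [
    {"organization": "Acme Corp.", "title": "Chatbot for support", "vendor": "VendorA"},
    {"organization": "ACME Corp", "title": "Chatbot for Support ", "vendor": "VendorA"},
    {"organization": "Globex", "title": "Demand forecasting", "vendor": "VendorB"},
]

unique_cases = deduplicate(sample_cases)
print(len(unique_cases))
```

Exact-key matching like this only catches near-identical listings. Stories that were rewritten between sections of a vendor site would still need fuzzy matching or manual review.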

Final tally:

2,260 curated cases in the main dataset

266 cases still in review

690 cases excluded (transparency matters)
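As a quick sanity check, the three buckets can be added up and compared with the "over 3,000 entries" starting point. The sketch below assumes the buckets do not overlap and together cover every collected entry, which the tally implies but does not state outright.

```python
# Counts taken from the final tally above.
curated = 2260
in_review = 266
excluded = 690

# Assumption: the three buckets partition the collected entries.
total = curated + in_review + excluded
shares = {
    "curated": curated / total,
    "in_review": in_review / total,
    "excluded": excluded / total,
}

print(total)  # 3216
for name, share in shares.items():
    print(f"{name}: {share:.1%}")
```

Under that assumption, roughly one in five collected entries did not qualify as AI.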

Anecdotes From the Trenches

Building the library wasn’t just technical work. It was full of quirks, dilemmas, and ironies:

“AI-Powered” Doesn’t Mean AI

Vendors love the label. I saw cases where “AI-powered” simply meant a search box, dashboard/analytics, or a rules engine. I deliberately excluded these, even though they inflated vendor numbers.

Vanishing Success Stories

Some case studies went missing overnight. Entire sections of vendor websites returned 404 errors. I think that’s ironic, given that these are supposed to be “reference” success stories. Did the projects fail? Were they never real? We’ll never know.

The Scraping Irony

Several vendor sites actively blocked scraping. I find this both funny and ironic, since these same vendors scrape internet data to train their models. Collecting data meant working carefully and sometimes manually.

Thin Documentation

A surprising number of use cases had almost no detail. Were they pilots that never scaled? Marketing placeholders? It’s hard to know, really.

Unexpected Gems

Some cases were fascinatingly unique, like Iceland’s project to preserve endangered languages, or NGOs using AI chatbots for farming advice in Africa. These weren’t from Fortune 500s. They were from small startups and nonprofits doing creative, important work.

What I Found

After the dust settled, some clear patterns emerged.

Leading Industries

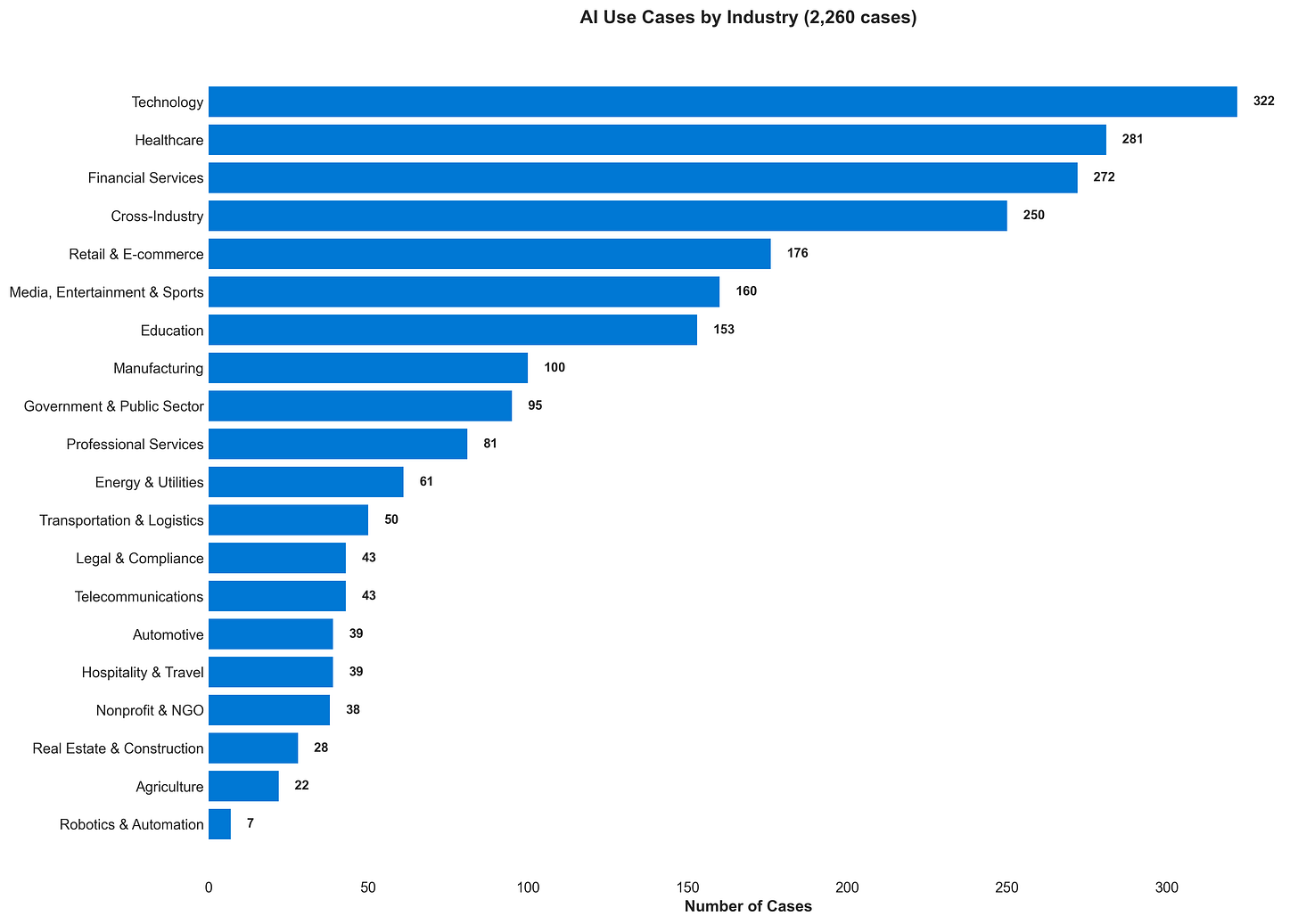

Technology, Financial Services, Healthcare, Retail, and Manufacturing were the biggest adopters. Government and Professional Services also showed strong momentum, as did Agriculture, often with nonprofit and social-good angles.

Common Outcomes

Most projects weren’t flashy AGI experiments. They were practical: efficiency gains, cost savings, quality improvements, and new capabilities (like analyzing billions of images or supporting low-resource languages).

Vendors & Tools

Microsoft dominates with Copilot and Azure OpenAI. AWS Bedrock and SageMaker appear frequently in cloud-native projects. Google Vertex AI and Gemini are strong in tech and retail. OpenAI GPT-4/4o is widely used in customer support and content. NVIDIA is prevalent in ML/DL infrastructure. Claude models and the Claude API are loved by developers and strong in high-impact cases.

Surprises

Some of the most creative use cases came from NGOs and small startups, not just Fortune 500s. ESG and sustainability use cases are growing. Several vendor case studies vanished from websites while I was still collecting them, suggesting that not every AI pilot succeeds.

What I Actually Learned

Working through this dataset taught me as much about AI adoption as it did about the hype that surrounds it.

Vendors inflate everything. Many “AI-powered” implementations are closer to automation or data analytics. The label sells.

Publication pipelines shape perception. Microsoft and AWS publish far more stories than others, which makes them appear dominant. But this is also structural: as cloud providers with massive enterprise installed bases, it’s easier for them to claim use cases. Once Microsoft Copilot is enabled in Microsoft 365, every Word, Outlook, or Teams user suddenly becomes part of an “AI adoption story,” whether they’re deeply using it or not.

AI is messy and interdisciplinary. The neat boxes of “Healthcare” or “Finance” often fall apart when you look at real implementations. Cross-industry ambiguity is everywhere.

Most use cases are incremental, not revolutionary. Efficiency and productivity dominate. The transformative cases exist, but they’re the exception.

Documentation reveals maturity. Many case studies lacked depth, which raises the question of whether these implementations actually scaled or succeeded.

What’s Inside

The repo includes:

Datasets: 2,260 curated cases, plus in-review and excluded datasets for transparency. Schema, taxonomy, and methodology documentation included.

Insights: Trend analysis, vendor comparisons (with disclaimers), and 25 featured cases that stood out.

Charts: Industry distribution, use case domains, outcomes, vendor presence, and a charts gallery for quick browsing.

Tools: A starter Jupyter notebook to explore the dataset yourself, with minimal dependencies and example workflows.

License: Code is MIT. Datasets, insights, and charts are CC-BY 4.0. Use it, remix it, share it. Just credit the source.
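For a sense of what exploring the dataset might look like outside the starter notebook, here is a minimal sketch using only the Python standard library. The column names (`industry`, `vendor`, `outcome`) and the inline sample rows are made up for illustration. Check the repo's schema documentation for the real field names and file paths.

```python
import csv
import io
from collections import Counter

# Hypothetical sample standing in for a dataset file from the repo.
SAMPLE_CSV = """industry,vendor,outcome
Healthcare,VendorA,efficiency
Retail,VendorB,cost savings
Healthcare,VendorB,quality
Agriculture,VendorA,new capability
"""


def count_by(rows, column):
    """Count rows per distinct non-empty value in the given column."""
    return Counter(row[column] for row in rows if row.get(column))


# Swap io.StringIO(...) for open("path/to/file.csv", newline="")
# to read a real file instead of the inline sample.
rows = list(csv.DictReader(io.StringIO(SAMPLE_CSV)))

industry_counts = count_by(rows, "industry")
for industry, n in industry_counts.most_common():
    print(industry, n)
```

The same `count_by` helper works for any categorical column, so vendor presence or outcome types can be tallied the same way.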

Why This Matters

This project is about transparency, literacy, and evidence.

By looking at documented case studies at scale, we can:

Spot real adoption patterns (not just marketing claims)

Understand how industries are deploying AI

See the gaps between what’s promised and what’s delivered

Give educators, students, and practitioners a neutral resource to build upon

Explore the Repo

AI Use Cases Library v1.0 on GitHub.

This is v1.0, but it’s just the beginning. Future releases will add industry-specific breakdowns, expanded visualizations, a search interface, and more enrichment. Contributions are welcome.

Final Thought

Reviewing 2,260 use cases reshaped how I think about AI adoption.

It’s not all hype, nor is it magic. It’s a patchwork of experiments, pilots, and enterprise-scale deployments. Some succeed, some quietly vanish, and many are still finding their place.

But one thing became clear: AI is already everywhere, not in some distant future, but in the processes, products, and services we interact with every day. And now, with this library, we can see a more realistic picture of what’s actually happening.

Explore it yourself. Question it. Build on it. That’s what it’s here for.
